Archive for the ‘Artificial Intelligence’ Category

The paraconsistent logic

Kurt Gödel’s incompleteness result, that a sufficiently expressive formal system cannot be both complete and consistent, was fundamental to a new phase in formal logic and contributed to the emergence of the computer.

It was the Peruvian philosopher Francisco Miró Quesada, unknown to many Latin American scholars, who coined the word paraconsistent in 1976.

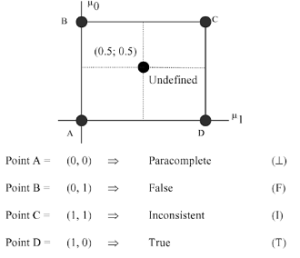

The theory, developed by the Brazilian Newton da Costa, has become very important in several areas, including philosophy and Artificial Intelligence. In the figure, the side axis, which runs from 0 to 1, is called the degree of belief; point A marks the consistent state and point C the inconsistent one. The theory has many applications to everyday life.

Applied to semantics, the study mainly explores paradoxes: for example, one can say that a blind man sees under certain circumstances. The study of different forms of perception and ability can likewise help set the parameters of the deep mind.

We have already argued that neologicism can help AI precisely in this phase of deep intelligence, for example in the study of natural language, the language of everyday life.

The idea that A and not-A could both hold was inconceivable in Western philosophy: it runs against the principle of non-contradiction and its companion, the principle of the excluded middle, which come from Parmenides and were consolidated in Aristotle. Paraconsistent logics are deliberately “weaker”, a term that refers to this break with classical logic, because they accept fewer propositional inferences as valid than classical logic does. Paraconsistent languages are therefore more conservative than their classical counterparts, and this changes Alfred Tarski’s hierarchy of metalanguages.
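To make the idea of “fewer valid inferences” concrete, here is a minimal sketch. It does not use da Costa’s own systems; as a stand-in it assumes Graham Priest’s three-valued Logic of Paradox (LP), a well-known paraconsistent logic, and the helper names (`entails`, `neg`, `conj`, `disj`) are hypothetical. It checks by brute force that a contradiction does not entail an arbitrary sentence, while an ordinary inference such as conjunction elimination still holds.

```python
from itertools import product

# Truth values of the three-valued Logic of Paradox (LP), used here as an
# assumed stand-in for a paraconsistent logic:
# F = false only, B = both true and false, T = true only.
F, B, T = 0, 1, 2
DESIGNATED = {B, T}  # values that count as "at least true"


def neg(a):
    # Negation swaps T and F and leaves B fixed.
    return 2 - a


def conj(a, b):
    return min(a, b)


def disj(a, b):
    return max(a, b)


def entails(premises, conclusion, n_vars=2):
    """True if every valuation designating all premises designates the conclusion."""
    for vals in product((F, B, T), repeat=n_vars):
        if all(p(*vals) in DESIGNATED for p in premises):
            if conclusion(*vals) not in DESIGNATED:
                return False
    return True


# Explosion: from A and not-A, infer an arbitrary Q.
explosion = entails([lambda a, q: a, lambda a, q: neg(a)], lambda a, q: q)

# Disjunctive syllogism: from (A or Q) and not-A, infer Q.
disj_syllogism = entails(
    [lambda a, q: disj(a, q), lambda a, q: neg(a)], lambda a, q: q
)

# Conjunction elimination: from (A and Q), infer A.
conj_elimination = entails([lambda a, q: conj(a, q)], lambda a, q: a)

print(explosion, disj_syllogism, conj_elimination)
```

Under these assumptions the first two checks fail (the valuation A = B, Q = F is a counterexample to both) and the third succeeds, which is the sense in which such a logic is weaker than the classical one.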

The influence on natural language was anticipated in 1984 by Solomon Feferman, who stated: “… natural language abounds in expressions directly or indirectly self-referential, although seemingly harmless, all of which are excluded from the Tarskian framework”, because in daily life, in truth, we are paraconsistent.

And you, who do you say that I am?

The truth of facts is revealed only in the truth of acts. This holds for daily life, for politics and for speeches: if we live in the age of post-truth, its limit lies in the acts.

Day to day, we like to create narratives that favor our convenience and our ideals, but the unveiling almost always lies beyond language and speech.

The creation of a logical intelligence in deeper layers, now called Deep Mind or Deep Learning, is nothing more than the artificial response to the virtual world, the part of the real that lies next to the actual. It is a logic consistent with action, whereas the human will always have some dyslexia.

Full consciousness is linked to full dialogue, in which discourses can interpenetrate within the hermeneutic circle. The difference with artificial intelligence is that the machine learns from humans but will find it difficult to escape from formal logic, while the human is ontological.

This means that we are in the age of Being, a deeper manifestation of what we are. Contrary to what anti-technology discourse supposes, it is precisely technology that can help human speech in the essential aspects of logic, which we sometimes falsify in order to be right.

Historically, technology has not been detached from human needs. It is often poor adaptation, or poor use of the human relationship with technology, that causes disruption and misunderstanding of its true role, which is to assist craft, art and technique, as the Greek origin of the word, techné, indicates.

In the biblical passage in which Jesus tests his apostles, he asks: “And you, who do you say that I am?” (Mt 16:15). For some he was a great prophet, for others the return of Elijah or even of Moses, but not always God, that is, the Divine wisdom among us.

What makes the use of the evangelical message in politics reprehensible is not that the message should stay outside the interests of the common good and of society in general, but the possibility of instrumentalizing it in favor of a discourse that is not always coherent with the gospel.

Is it possible to measure the evolution of AI?

It now seems so, although more precise tests, and a measure of how accurate those tests are, still need to be established. Researchers at the Computer Vision Lab of the Swiss Federal Institute of Technology in Zurich (ETH Zurich) have created a benchmark that tests the performance of neural network platforms on common Artificial Intelligence tasks, such as answering questions, finding specific data, and other smartphone applications that already rely on neural networks.

The idea is to measure the performance of AI systems, as is already done on today’s computers, in the useful smartphone applications that rely on Artificial Intelligence.

The basic plan is to measure the performance of these systems on tasks they already handle, such as answers about the time, locations, flights and restaurants, and then to grow in complexity, which will push manufacturers and application vendors to make their models more sophisticated.

The application is called AI Benchmark. Among other things, it compares how fast AI models run on different Android smartphones and assigns each device a performance score. It is already available on Google Play.
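The kind of speed comparison described above can be illustrated with a minimal timing sketch. This is not the AI Benchmark application’s code or scoring method; `toy_model` is a hypothetical stand-in for a neural-network inference call, and the choice of median latency over repeated runs is an assumption made for the example.

```python
import statistics
import time


def toy_model(x):
    # Hypothetical stand-in for a neural-network inference call.
    return sum(v * v for v in x)


def benchmark(fn, sample, runs=20, warmup=3):
    """Time repeated calls of fn(sample) and summarize the latencies in seconds."""
    # Warm-up calls are not timed, so one-off start-up costs do not skew results.
    for _ in range(warmup):
        fn(sample)

    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(sample)
        timings.append(time.perf_counter() - start)

    return {
        "median_s": statistics.median(timings),
        "min_s": min(timings),
        "runs": runs,
    }


result = benchmark(toy_model, list(range(10_000)))
print(result)
```

Running the same function on two devices and comparing `median_s` gives a rough relative score; a real benchmark would also have to control for model, precision and thermal state.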

Devices that have a dedicated AI chip (and run at least Android 4.1) will already be fast enough to perform these functions.

Some applications may be more complex, for example classifying images for easy recognition, or segmenting and enhancing photos, which indicates that this type of application can grow and evolve toward more complex uses.

AI learning from animals

Beavers, termites and other creatures build structures in response to environmental problems, and researchers at the University at Buffalo have proposed using these strategies in autonomous robots. In the new system the robot continuously monitors and modifies its terrain to make itself more mobile, much as a beaver reacts to flowing water by building a dam. What kind of intelligence is this? Thomas Nagel would ask what it is like to be a beaver.

The problem is not so simple, and the algorithms must change when spaces are unpredictable and complex. For this the researchers resorted to a biological phenomenon called stigmergy, a form of indirect coordination in which agents react to changes in the environment; biologists and zoologists study it. Using the new algorithm, the researchers equipped the robot with a camera, specialized software and a robotic arm that lifts and deposits objects, and placed bean bags of different sizes around the area. In 10 tests, the robot moved between 33 and 170 bags, each time creating new ramps to reach its destination.
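The sense–act loop behind this kind of ramp building can be sketched in a toy model. This is not the researchers’ algorithm: the one-dimensional terrain, the unit-height bags, the `max_step` climbing limit and the function names are all assumptions made for illustration. The robot repeatedly looks for the first upward step it cannot climb and deposits one bag just before it, so each deposit changes the terrain that the next decision reacts to.

```python
def first_blocked_step(heights, max_step):
    """Index i of the first upward step from cell i to i+1 that is too high, or None."""
    for i in range(len(heights) - 1):
        if heights[i + 1] - heights[i] > max_step:
            return i
    return None


def build_ramp(heights, max_step=1):
    """Deposit unit-height bags until every upward step is at most max_step.

    Toy assumption: only upward steps block the robot; it can always descend.
    """
    terrain = list(heights)  # work on a copy of the sensed terrain
    bags_used = 0
    while (i := first_blocked_step(terrain, max_step)) is not None:
        terrain[i] += 1  # drop one bag on the cell just before the obstacle
        bags_used += 1
    return terrain, bags_used


# Flat ground followed by a ledge three units high.
terrain, bags_used = build_ramp([0, 0, 0, 0, 3])
print(terrain, bags_used)  # [0, 0, 1, 2, 3] 3
```

No plan for the whole ramp is computed in advance; the staircase emerges from local reactions to the current state of the terrain, which is the core idea of stigmergy.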

A press release about this work is available on the University at Buffalo website, and the paper was scheduled for presentation at the Robotics: Science and Systems conference in Pittsburgh (June 25-30).