Information before knowledge and wisdom

In times of cultural crisis, information is abundant but not always true, knowledge is limited, and wisdom is scarce.

Although it is common sense that knowledge requires information, little is known about what information is, and with little knowledge there is little chance of reaching wisdom. Hence the flood of “maxims” (sentences taken out of context), self-help (an unfit name for self-knowledge), and fundamentalism: the misery of philosophy.

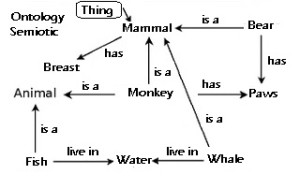

There are many possible approaches to information, but if we consider it as something present in the life of beings, then a definition goes back to the origin of the word: the Latin stem (information-) of the nominative (informatio), meaning to delineate or conceive an idea. It is understood as giving shape to, or molding, the mind, so that what is in-formed becomes, for the being, ontological information (see the example in the picture).

However, once this form is expressed in language, idioms, or some other kind of sign, the information already exists. This relation has a semiotic root, described by Beynon-Davies, who explains the multifaceted concept of information in terms of signs and signal-sign systems.

For him, there are four interdependent levels, or layers, of semiotics: pragmatics, semantics, syntax, and empirics (or the experimental level, a term I prefer because it does not imply empiricism).

Pragmatics is concerned with the purpose of communication, semantics with the meaning of a message conveyed in a communicative act (which generates an ontology), and syntax with the formalism used to represent a message.

Shannon's 1948 paper, republished with Weaver in 1949 as the book The Mathematical Theory of Communication, is one of the foundations of information theory, in which information has not only a technical sense but also a measure of meaning, and can be treated simply as a signal.

It was Michael Reddy who observed that the “signals” of the mathematical theory are “patterns that can be exchanged”; in this sense they are codes. No message is contained in the signal: the signal expresses the ability to “select from a set of possible messages” and has no meaning in itself, since it must be “decoded” among the possibilities that exist.
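
Reddy's point that a signal conveys only the ability to select from a set of possible messages can be made concrete with the Hartley measure, a formula the text above does not name: choosing one of N equally likely messages takes log2 N bits, regardless of what the messages mean. A minimal Python sketch, assuming all messages are equally likely; the function name is hypothetical:

```python
import math

def bits_to_select(num_messages: int) -> float:
    """Bits needed to pick one message out of num_messages equally likely ones (log2 N)."""
    if num_messages < 1:
        raise ValueError("need at least one possible message")
    return math.log2(num_messages)

# A signal that selects one of 8 equally likely messages carries 3 bits,
# whatever those messages happen to mean.
for n in (2, 8, 26):
    print(n, round(bits_to_select(n), 3))
```

The measure counts possibilities, not meanings: 26 letters or 26 unrelated concepts cost the same number of bits to select.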

A certain quantity of signals is needed to overcome the uncertainty of the transmission. Whether the message gets through depends on whether the ratio between signal and noise (noise being present in the encoding and transmission of the signal) is adequate, and whether the degree of entropy has not destroyed the transmitted signal.
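
The role of the signal-to-noise ratio can be quantified with the Shannon-Hartley theorem, which the text does not cite but which belongs to the same theory: the maximum error-free rate over a noisy channel is C = B log2(1 + S/N). A minimal sketch, assuming a band-limited channel with additive white Gaussian noise; the function names and the numbers are hypothetical illustrations:

```python
import math

def snr_db(signal_power: float, noise_power: float) -> float:
    """Signal-to-noise ratio expressed in decibels: 10 * log10(S / N)."""
    return 10 * math.log10(signal_power / noise_power)

def capacity_bits_per_second(bandwidth_hz: float, signal_power: float, noise_power: float) -> float:
    """Shannon-Hartley limit C = B * log2(1 + S / N) for an AWGN channel."""
    return bandwidth_hz * math.log2(1 + signal_power / noise_power)

# Illustrative numbers only: a 3 kHz channel, same signal power, two noise levels.
for noise in (1.0, 100.0):
    print(f"S/N = {snr_db(1000.0, noise):.0f} dB -> "
          f"C = {capacity_bits_per_second(3000.0, 1000.0, noise):.0f} bit/s")
```

More noise lowers the ceiling on how much can be reliably transmitted; below that ceiling, redundancy (more signals) can compensate for the noise.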

The theory of communication analyzes the numerical measure of the uncertainty of an outcome, which is why it relies on the concept of information entropy, generally attributed to Claude Shannon.
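
Shannon's entropy, H = -Σ p log2 p, is that numerical measure of uncertainty. A minimal Python sketch, assuming a discrete source whose probabilities are known or estimated from observed symbol frequencies; the function names are hypothetical:

```python
import math
from collections import Counter

def shannon_entropy(probabilities) -> float:
    """H = sum(-p * log2 p) in bits; zero-probability outcomes contribute nothing."""
    return sum(-p * math.log2(p) for p in probabilities if p > 0)

def entropy_of_text(text: str) -> float:
    """Entropy per symbol, using the observed symbol frequencies of `text` as probabilities."""
    if not text:
        return 0.0
    counts = Counter(text)
    total = len(text)
    return shannon_entropy(c / total for c in counts.values())

print(shannon_entropy([0.5, 0.5]))   # fair coin: maximal uncertainty for two outcomes
print(shannon_entropy([0.9, 0.1]))   # biased coin: less uncertainty
print(entropy_of_text("aaaa"))       # a fully predictable source
```

Entropy is highest when all outcomes are equally likely and zero when the outcome is certain, which is why it measures uncertainty rather than meaning.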

Knowledge is that in which information has already been realized ontologically.