Archive for the ‘Computer – Software’ Category

Possible and impossible changes

In other eras, in proportion, the changes of earlier periods also made a strong impression on people. Among the most influential disruptive technologies were lenses: eyeglasses made it possible to read the first printed books, and thanks to the telescope, the Copernican revolution took place.

Paradigm shifts cause astonishment. As for what can actually be done, what is possible in a more distant future and what may happen in the next few years, I have already pointed in an earlier post to “Physics of the Impossible” by Michio Kaku (2008).

At the beginning of this book the author quotes Einstein: “If an idea does not seem absurd at first, then it will not have any future.” It takes a strong, shocking thought like this to understand that, if we are to bet on innovation, and this is the historical moment for it, we must accept that most disruptive things will at first seem absurd.

Looking further back, when microcomputers first appeared it was said they would be of little use to most people; the mouse was considered clumsy and “not very ergonomic” when it came out; and there is still much distrust of “artificial intelligence”, not only among laypeople but among scholars as well. Others idealize an “electronic brain”, but neither Sophia (the first robot to be granted citizenship) nor Amazon’s Alexa really has “intelligence.”

What must be overcome, as the theocentric worldview had to be in Copernicus’s time, is today an anti-technology sociopathy that borders on fundamentalism. If there are injustices and inequalities, they must be fought on the plane where they actually lie: the social and the political.

Roland Barthes said that every denial of language “is a death”; with the adoption of technology by millions of people, this death becomes a conflict, first between generations and then between different conceptions of development and education.

To scholars I recommend Heidegger, who said of radio and television that only half a dozen people understood the process; and of course those with financial power can control what these media publish. But one can also answer in the religious field.

Consider the reading from the evangelist Mark, Mk 16:17-18: “The signs that will accompany those who believe will be these: they will cast out demons in my name, they will speak new tongues; if they take up snakes or drink any deadly poison, it will not hurt them; when they lay their hands on the sick, they shall be healed.”

This needs to be updated in light of new interpretations of this biblical text.

KAKU, M. (2008) Physics of the Impossible: A Scientific Exploration into the World of Phasers, Force Fields, Teleportation, and Time Travel. New York: Doubleday.

Trends in Artificial Intelligence

By the late 1980s the promises and challenges of artificial intelligence seemed to crumble, as captured in Hans Moravec’s remark in his 1988 book Mind Children: “It is easy to make computers display adult-level performance on intelligence tests or play checkers, and it is difficult or impossible to give them the one-year-old’s abilities when it comes to perception and mobility.”

Marvin Minsky, one of the greatest pioneers of AI (Artificial Intelligence) and co-founder of the MIT Artificial Intelligence Laboratory, declared in the late 1990s: “The history of AI is funny, because the first real accomplishments were beautiful things, a machine that did proofs in logic and did well in a calculus course. But then, trying to make machines capable of answering questions about simple stories, machine … first year of basic education. Today there is no machine that can achieve this.” (KAKU, 2008, p. 131)

Minsky, together with another AI pioneer, Seymour Papert, sketched a theory called The Society of Mind, which sought to explain how what we call intelligence could emerge from the interaction of non-intelligent parts. But AI took another path: both died in 2016, having seen AI’s turn but without seeing the “society of mind” emerge.

Driven by the needs of the nascent Web, whose data lacked “meaning,” AI research joined the efforts of Web designers to develop the so-called Semantic Web.

There were already softbots, or simply bots: software robots that crawled raw data looking “to capture some information.” In practice they were scripts written for the Web or the Internet, which could now serve a nobler function than stealing data.
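
To make the idea of a softbot concrete, here is a minimal sketch in Python of the data-capturing part of such a bot: it parses a page’s raw HTML and collects the links it could follow next. It works on an in-memory HTML string (the page and the example.com/example.org URLs are hypothetical) so that it runs without network access; a real crawler would also fetch pages, respect robots.txt and limit its request rate.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects absolute URLs from <a href="..."> tags in an HTML page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links against the page's own URL.
                    self.links.append(urljoin(self.base_url, value))


def collect_links(html: str, base_url: str) -> list[str]:
    """Return every link found in `html`, in document order."""
    parser = LinkCollector(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


page = '<p>See <a href="/about">about</a> and <a href="https://example.org/x">x</a>.</p>'
print(collect_links(page, "https://example.com/index.html"))
```

The standard-library parser is used on purpose: it tolerates the messy, semi-structured markup that such bots typically meet.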

The idea of intelligent agents was revived. Built from fragments of code, they took on a different role on the Web: tracking semi-structured data and storing it in different kinds of databases, no longer based on the Structured Query Language (SQL), that look for questions within the questions and answers exchanged on the Web. These databases are called NoSQL, and they would also serve as a basis for Big Data.
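
The contrast with SQL can be sketched as follows: instead of tables with a fixed schema, a document-oriented (NoSQL-style) store keeps each record as a free-form document and queries whatever fields happen to exist. This is a toy in-memory Python illustration under that assumption, not the API of any real NoSQL product; the class name `DocumentStore` and the sample documents are hypothetical.

```python
from typing import Any


class DocumentStore:
    """A toy schema-less store: each document is a dict and fields may differ."""

    def __init__(self) -> None:
        self._docs: list[dict[str, Any]] = []

    def insert(self, doc: dict[str, Any]) -> int:
        self._docs.append(dict(doc))
        return len(self._docs) - 1  # position used as a simple id

    def find(self, **criteria: Any) -> list[dict[str, Any]]:
        """Return documents whose fields equal all the given criteria.
        Documents that lack a field simply do not match (there is no schema)."""
        return [d for d in self._docs
                if all(k in d and d[k] == v for k, v in criteria.items())]


store = DocumentStore()
store.insert({"type": "question", "text": "What is linked data?", "tags": ["web"]})
store.insert({"type": "answer", "text": "Data connected by URIs.", "votes": 3})
store.insert({"type": "question", "text": "What is NoSQL?"})
print([q["text"] for q in store.find(type="question")])
```

Note that the three documents share only some fields; a relational table would have forced them into one set of columns.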

The emerging challenge now is to build taxonomies and ontologies from this scattered, semi-structured Web information, which does not always answer to a well-crafted questionnaire or to logical reasoning within a clear formal construction.
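
A minimal sketch of what building a taxonomy from such material can involve: gather “is a” relations from records of uneven quality, skip what does not fit, and infer broader terms by following the links transitively. The record format `(term, "is_a", parent)` and the sample terms are assumptions made for illustration in Python.

```python
def build_taxonomy(records):
    """Map each term to its direct parents from (term, "is_a", parent) records,
    skipping records that are incomplete or use other relations."""
    parents = {}
    for rec in records:
        if len(rec) != 3 or rec[1] != "is_a":
            continue  # semi-structured input: ignore what does not fit
        term, _, parent = rec
        parents.setdefault(term, set()).add(parent)
    return parents


def ancestors(term, parents):
    """All broader terms reachable through is_a links (transitive closure).
    The `seen` set also protects against cycles in messy data."""
    seen, stack = set(), [term]
    while stack:
        for p in parents.get(stack.pop(), ()):
            if p not in seen:
                seen.add(p)
                stack.append(p)
    return seen


records = [
    ("softbot", "is_a", "software agent"),
    ("software agent", "is_a", "agent"),
    ("chatbot", "is_a", "softbot"),
    ("chatbot", "mentioned_in"),  # incomplete record, skipped
]
taxonomy = build_taxonomy(records)
print(sorted(ancestors("chatbot", taxonomy)))
```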

In this context Linked Data emerged: the idea of linking data about resources on the Web, identifying them by URIs (Uniform Resource Identifiers), which name and locate data on the Web.
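
Linked Data can be pictured as a set of subject-predicate-object triples in which resources are named by URIs, so that following a link amounts to looking up the triples whose subject is a given URI. The sketch below uses plain Python tuples rather than a real RDF library; the example.org URIs and the `wrote` predicate are hypothetical, while the `rdfs:label` URI is the standard one from RDF Schema.

```python
EX = "http://example.org/"  # hypothetical namespace for this example
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"

# Each triple: (subject URI, predicate URI, object URI or literal string).
graph = {
    (EX + "Kaku", RDFS_LABEL, "Michio Kaku"),
    (EX + "Kaku", EX + "wrote", EX + "PhysicsOfTheImpossible"),
    (EX + "PhysicsOfTheImpossible", RDFS_LABEL, "Physics of the Impossible"),
}


def objects(subject, predicate, graph):
    """All objects linked from `subject` through `predicate`."""
    return [o for s, p, o in graph if s == subject and p == predicate]


def labels_of_linked(subject, predicate, graph):
    """Follow a link to other resources, then read their human-readable labels."""
    return sorted(label
                  for target in objects(subject, predicate, graph)
                  for label in objects(target, RDFS_LABEL, graph))


print(labels_of_linked(EX + "Kaku", EX + "wrote", graph))
```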

The disturbing scenario of the late 1990s took a semantic turn in the 2000s.

KAKU, M. (2008) Physics of the Impossible: A Scientific Exploration into the World of Phasers, Force Fields, Teleportation, and Time Travel. New York: Doubleday.

The singularity and the technoprophets

Before Jean-Gabriel Ganascia wrote about the Myth of the Singularity, the idea that machines would surpass humans in human capacities had already been analyzed by Hans Moravec in his work Men and Robots: the future of human interfaces and robotics, and the careful, ethical Ganascia did not fail to cite him.

There are groups that study the ethical issues this involves, such as the Centre for the Study of Existential Risk at the University of Cambridge, but also groups committed to this project, such as Singularity University, with heavyweight sponsors such as Google, Cisco, Nokia, Autodesk and many others. There are also ethical studies such as those of the Institute for Ethics and Emerging Technologies, in which Ganascia participates, and of the Extropy Institute.

Among the technoprophets, a term coined by Ganascia, Kurzweil, a genius with his biases, is one of the most extravagant: he injects drugs into his body to prepare it to receive the “computational mind.” He once predicted this for 2024 and now speaks of 2045 to 2049, which is remarkable for someone said to have no beliefs, since this event, if it ever occurs, is very distant.

Ganascia considers this a false prophecy, while Moravec analyzes the real, and difficult, possibilities.

Gartner, which works on forecasting, sees neural computing as still crawling, with predictions 20 years out. Interfaces like Amazon’s Alexa and Hanson Robotics’ Sophia are human-interaction machines that learn things from everyday language, but they are far from the so-called machines of artificial general intelligence, because human reasoning is not a set of propositional calculations, as some think.

One could argue that this is because people are illogical, but illogical according to what logic? What we know is that humans are not machines; what we ask is whether machines can be human. That is the essential question of Philip K. Dick’s 1968 book “Do Androids Dream of Electric Sheep?”, which inspired Blade Runner.

I think dreams, imagination and virtuality are faces of the human soul; robots have no soul.

History of the algorithm

The idea that we can solve a whole class of problems through a finite number of interactions between tasks (or commands, as they are called in programming languages) originates in arithmetic.
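
A classic example of this arithmetic origin is Euclid’s algorithm for the greatest common divisor: one simple step repeated a finite number of times, guaranteed to stop. It is offered here as our illustration of the idea rather than something the post itself discusses; a minimal Python sketch:

```python
def gcd(a: int, b: int) -> int:
    """Euclid's algorithm: replace (a, b) by (b, a mod b) until b is zero.
    Each step strictly decreases b, so the loop ends after finitely many steps."""
    a, b = abs(a), abs(b)
    while b != 0:
        a, b = b, a % b
    return a


print(gcd(252, 105))  # 21
```

The trace for the example is 252 = 2·105 + 42, then 105 = 2·42 + 21, then 42 = 2·21 + 0, so the answer 21 is reached in three steps.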

Although Charles Babbage’s (1791-1871) machine and George Boole’s (1815-1864) algebra made a huge contribution to modern computers, most logicians and historians of the birth of the digital world agree that the actual problem was raised by David Hilbert (1862-1943) as the second of his problems, at a 1900 conference in Paris.

Among the 23 problems he set for mathematics, some recently solved, such as the weak form of Goldbach’s conjecture (see our post), and others still open, the second problem was to prove that arithmetic is consistent, that is, free from any internal contradiction.

In the 1930s, two mathematical logicians, Kurt Gödel (1906-1978) and Gerhard Gentzen (1909-1945), proved two results that drew new attention to the problem, both referring to Hilbert. This is, in fact, the origin of the question of, roughly, whether an enumerable problem can be solved by a finite set of steps.
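
The difference between a finite procedure and an open-ended enumeration can be illustrated with the Goldbach conjecture mentioned above (our illustrative choice, not part of Gödel’s or Gentzen’s work): checking one even number takes finitely many steps, but searching all even numbers for a counterexample is a procedure that, if the conjecture is true, never halts. A minimal Python sketch, with an explicit bound standing in for the unbounded search:

```python
def is_prime(n: int) -> bool:
    """Trial division; fine for the small numbers used here."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def goldbach_pair(n: int):
    """Finite check for one even n > 2: try every p <= n/2. Always halts."""
    for p in range(2, n // 2 + 1):
        if is_prime(p) and is_prime(n - p):
            return p, n - p
    return None


def first_counterexample(limit: int):
    """Bounded search for an even number with no Goldbach pair.
    Without `limit`, this loop would halt only if a counterexample exists."""
    for n in range(4, limit + 1, 2):
        if goldbach_pair(n) is None:
            return n
    return None


print(goldbach_pair(28))           # (5, 23)
print(first_counterexample(1000))  # None
```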

Gentzen’s solution was a proof of the consistency of Peano’s axioms, published in 1936, showing that consistency can be proved in a system weaker than Zermelo-Fraenkel set theory. It used the axioms of primitive recursive arithmetic together with transfinite induction up to the ordinal ε₀, and is therefore not a general proof.

Gödel’s second incompleteness theorem is more general: it shows that no proof of the consistency of Peano’s axioms can be carried out within that arithmetic itself.

It follows that if the only acceptable proof procedures are those that can be formalized within arithmetic, then Hilbert’s problem cannot be solved. Put more directly: a consistent formal system strong enough to express arithmetic cannot prove its own consistency.
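
In the notation commonly used today, the second incompleteness theorem can be written as follows, where T is a formal theory containing Peano arithmetic (PA) and Con(T) is the arithmetic sentence expressing that T proves no contradiction:

$$T \supseteq \mathsf{PA},\ T \text{ consistent and recursively axiomatizable} \;\Longrightarrow\; T \nvdash \mathrm{Con}(T)$$

Gentzen’s result does not contradict this: it establishes Con(PA) by using a principle, transfinite induction up to ε₀, that PA itself cannot prove.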

Polemics have been raised about these results: Kreisel (1976) argued that the proofs were syntactic answers to semantic problems; Detlefsen (1990) says that the theorem does not prohibit the existence of a proof of consistency; and Dawson (2006) holds that the proof of consistency is erroneous, drawing on the proofs given by Gentzen and by Gödel himself in a 1958 work.

Controversies aside, Kurt Gödel’s participation in the important Vienna Circle in the 1920s, before the war broke out, and the later discussions of his theorem by Alan Turing (1912-1954) and Claude Shannon (1916-2001) underline its importance for the history of algorithms and of the modern digital computer.

The technologies that will dominate 2018

One is undoubtedly on the agenda and should keep growing through 2021: Virtual and Augmented Reality. The difference is that the first creates an entirely virtual environment, while the second inserts virtual elements into the real environment, as in Pokémon Go, whose second version of the little monsters grew in 2017.

Estimates from research groups such as Gartner and TechCrunch say this market will move more than 100 billion dollars by 2021. Next year is a World Cup year, and Japan, for example, promises unprecedented broadcasts for the 2022 Cup in Qatar.

The Internet of Things is expanding its possibilities. The 5G network technology that we thought was distant is already in operation in many places in the USA and could become a reality next year. With it, the Internet of Things, which depends on this transmission efficiency, can take new directions such as water systems, low-cost energy and sophisticated traffic-control systems, finally bringing the IoT (Internet of Things) into people’s lives.

Another concern, though we do not know whether systems will become more effective, is security: this year WannaCry affected telephone systems and FedEx, among others, reaching more than 150 countries, and there are promises for 2018. Data production reached 2.5 exabytes per day (1 exabyte = 10^18 bytes), and Big Data technology is here to stay, but an important ally in handling this data should be Artificial Intelligence (pictured: a vision of a polygonal brain). The intelligent agents that will dominate Web 4.0 should appear this year, but the forecast for them to become reality on the Web is 2020.
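
For a sense of scale, the daily figure above can be converted into a per-second rate, assuming a 24-hour day of 86,400 seconds and decimal units (1 TB = 10^12 bytes):

$$\frac{2.5 \times 10^{18}\ \text{bytes/day}}{86\,400\ \text{s/day}} \approx 2.9 \times 10^{13}\ \text{bytes/s} \approx 29\ \text{TB/s}$$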

3D printing and nanotechnology are already a reality but should advance further, as should the early rollout of 5G internet. Yesterday we posted the “novelty” gadgets, the concept smartphone and the 360-degree camera; in technology, these things sometimes surprise us by bursting into practical reality.

Technology is part of the history of humanity; tomorrow we will post about the year 2017 in facts.

Art, autonomy and seeing

What remains veiled in recent art, and is present in Hegel’s discourse, even more so in the apparent rupture of leaving the “plane” for the three-dimensional forms of the “polite”, is still an idealist art of what Hegel called “autonomy”, which, because of this idealism, Rancière called “autonomization.”

Rancière clarifies what autonomization is: “one of these elements, the ‘breakdown of the threads of representation’ that bound them to the reproduction of a repetitive way of life. It is the substitution of these objects for the light of their appearance. From that point on, what happens is an epiphany of the visible, an autonomy of the pictorial presence.” (Rancière, 2003, p. 87)

This autonomization is ultimately the famous “art for art’s sake,” or, in the opposite sense, the “utilitarianism of art.” But neither can deny the specific character of art and its connection with words, nor its usefulness: “the beautiful is as useful as the useful,” the writer Victor Hugo would say. What is at stake is accepting the emancipation of the spectator through “interaction.”

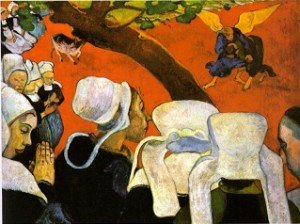

On seeing, Rancière writes about art: “Whether it be a Descent from the Cross or a White Square on a white background, it means to see two things at once” (Rancière, 2003, p. 87), a relation between “the exposition of the forms and surface of inscription of words” (ibidem), where the presences are “two regimes of the braid of words and forms.”

To understand the problem of vision, Rancière uses Gauguin’s painting of peasants in a field. There is a “first picture: peasants in a field look at the fighters in the distance” (Rancière, 2003, p. 95). Their presence and the way they are dressed show that it is something else, and so a second picture arises: “they must be in a church” (idem). For this to make sense the setting would have to be less grotesque, yet no realistic, regionalist painting is found there, and so there is a third picture: “The spectacle that it represents has no real place. It is purely ideal. Peasants do not see a realistic scene of preaching and fighting. They see, and we see, the Voice of the preacher, that is, the Word of the Word that passes through this voice. This voice speaks of Jacob’s legendary combat with the Angel, of terrestrial materiality with heavenly ideality.” (ibid.)

In this way, Rancière affirms, the description is a substitution of the word for the image, and it substitutes it “for another living word, the word of the scriptures” (Rancière, 2003, p. 96).

He also connects it with Kandinsky’s pictures, writing: “In the space of the visibility which his text constructs, Gauguin’s painting is already a picture like those that Kandinsky will paint and justify: a surface in which lines and colors become more expressive signs obeying the unique coercion of ‘inner necessity’” (Rancière, 2003, p. 97). As we explained earlier, this is not pure subjectivism, because it connects both the inner thought and the thought about what is described in the picture.

The important thing is the symbiosis between the image, the words and the vision resulting from an “unveiling” of the image that can be translated into words.

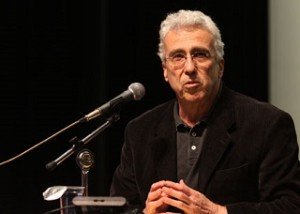

Are machines becoming humanized?

Summary of the lecture by Prof. Dr. Teixeira Coelho of IEA-USP at the EBICC event.

Are machines becoming humanized, or is it human beings who are losing their humanity and turning into immediate, valuable, excessive and totally subjugable products, of which the world is full?

As results of the Computational Humanities study group of USP’s Institute of Advanced Studies, the lecture presented a list of concepts, most with a critical view of technology, with terms such as digitization, mobility, automation, augmented reality, proxy effect, duplication, anonymity, perfectibility, rationality, coordination, unification and completeness, among others, as the outcome of an e-culture.

He discussed the contemporary reality of computational and digital cultures and their relation to cultural production, whether mediated or self-produced, in a context where the work of robots replaces the manual work of humans and, through artificial intelligence, is beginning to replace intellectual work as well.

Gregory Chaitin then gave his talk, already discussed in the previous post.

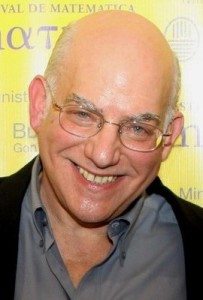

Consciousness and information

Today (October 31) the EBICC event hosts a lecture by Gregory Chaitin, the mathematician and computer scientist who reformulated Gödel’s incompleteness theorem. He is considered one of the founders of what is today known as Kolmogorov (or Kolmogorov-Chaitin) complexity, together with Andrei Kolmogorov and Ray Solomonoff. Today, algorithmic information theory is a common subject in any computer science curriculum.

Abstract:

He reviews applications of the concept of conceptual complexity, or algorithmic information, in physics, mathematics, biology and even the human brain, and proposes building the universe out of information and computation rather than matter and energy, a worldview much friendlier to discussions of mind and consciousness than traditional materialism permits.
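
Kolmogorov-Chaitin complexity (the length of the shortest program that outputs a given string) cannot be computed in general, but a common practical stand-in is the size of the string after compression, which, plus the fixed size of the decompressor, bounds it from above. The Python sketch below uses zlib for that purpose; it is our illustration of the idea, not a method from Chaitin’s lecture.

```python
import random
import zlib


def compressed_size(data: bytes) -> int:
    """Length of the zlib-compressed data: a computable upper-bound proxy
    for the (uncomputable) Kolmogorov complexity of `data`."""
    return len(zlib.compress(data, 9))


regular = b"ab" * 1000                                  # 2000 bytes, very regular
rng = random.Random(0)
noisy = bytes(rng.getrandbits(8) for _ in range(2000))  # 2000 pseudo-random bytes

print(compressed_size(regular), compressed_size(noisy))
```

The regular string shrinks to a few dozen bytes, while the pseudo-random one does not shrink at all. Yet the pseudo-random bytes do have a short description (the generator plus its seed), which zlib cannot find; this is exactly why compression only gives an upper bound.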

The foundations of his thinking are set out in the book Meta Math! The Quest for Omega (Pantheon Books, 2005; reprinted in the UK as Meta Maths: The Quest for Omega, Atlantic Books, 2006).

AI can detect hate speech

Hate speech is growing on social media, and identifying it from a single source can be dangerous and biased. For this reason, researchers in Finland trained a machine learning algorithm to identify hate speech by computationally comparing texts with what distinguishes those placed in the “hateful” category of a classification system.
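
The post does not describe the model the Finnish team used. As a hedged illustration of the general idea, learning from labelled examples what distinguishes texts in the “hateful” category, here is a minimal multinomial Naive Bayes classifier in Python. The tiny training set is invented for illustration only; a real system would need thousands of carefully annotated messages.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lower-case whitespace tokenization (deliberately simple)."""
    return text.lower().split()


class NaiveBayesClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts: dict[str, Counter] = {}  # label -> token frequencies
        self.doc_counts: Counter = Counter()       # label -> number of examples
        self.vocab: set[str] = set()

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesClassifier":
        for text, label in zip(texts, labels):
            self.doc_counts[label] += 1
            counts = self.word_counts.setdefault(label, Counter())
            for token in tokenize(text):
                counts[token] += 1
                self.vocab.add(token)
        return self

    def predict(self, text: str) -> str:
        """Return the label with the highest log-posterior score."""
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = "", -math.inf
        for label, counts in self.word_counts.items():
            score = math.log(self.doc_counts[label] / total_docs)  # log prior
            denominator = sum(counts.values()) + len(self.vocab)
            for token in tokenize(text):
                score += math.log((counts[token] + 1) / denominator)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Invented toy examples, for illustration only.
texts = [
    "they are vermin and must go",
    "vermin like them ruin everything",
    "thanks for the debate today",
    "great turnout at the debate",
]
labels = ["hateful", "hateful", "neutral", "neutral"]
clf = NaiveBayesClassifier().fit(texts, labels)
print(clf.predict("those vermin must go"))
```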

The researchers used the algorithm daily to monitor all the public content that candidates in municipal elections produced on Facebook and Twitter.

The algorithm was trained on thousands of messages, which were cross-checked to confirm their scientific validity. According to Salla-Maaria Laaksonen of the University of Helsinki: “When categorizing messages, the researcher must take a position on language and context, and therefore it is important that several people participate in the interpretation of the training material.” Otherwise hatred may be identified only one-sidedly; someone may, for example, use hateful speech to defend themselves from an odious action.
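
One common way to check whether several annotators interpret the material consistently is an inter-annotator agreement measure such as Cohen’s kappa, which corrects raw agreement for the agreement expected by chance. The post does not say which measure, if any, the Helsinki researchers used; this Python sketch and its labels are purely illustrative.

```python
from collections import Counter


def cohen_kappa(a: list[str], b: list[str]) -> float:
    """Cohen's kappa for two annotators who labelled the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equally long, non-empty label lists")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement: probability that both pick the same label independently.
    expected = sum(count_a[lbl] * count_b[lbl] for lbl in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


annotator_1 = ["hate", "ok", "ok", "hate"]
annotator_2 = ["hate", "ok", "hate", "hate"]
print(cohen_kappa(annotator_1, annotator_2))
```

Here the annotators agree on 3 of 4 messages (0.75), but half of that agreement would be expected by chance, so kappa is 0.5.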

She says social media services and platforms can identify hate speech if they choose to, and thereby influence what Internet users do. “There is no other way to extend it to the level of individual citizens,” says Laaksonen; that is, these systems are semi-automatic, because they provide for human participation in the categorization.
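
A semi-automatic setup of this kind is often built as a triage step: the classifier handles the cases it is confident about and hands the uncertain middle band to people. The sketch below assumes a classifier that outputs a hate-speech score between 0 and 1; the function name `route` and both thresholds are hypothetical values chosen for illustration.

```python
def route(score: float, flag_above: float = 0.9, pass_below: float = 0.2) -> str:
    """Semi-automatic triage of one message from a classifier score in [0, 1].
    Confident cases are handled automatically; the uncertain band goes to humans."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be a probability in [0, 1]")
    if score >= flag_above:
        return "flag"
    if score < pass_below:
        return "pass"
    return "human_review"


for s in (0.95, 0.5, 0.1):
    print(s, route(s))
```

Widening the band between the two thresholds sends more messages to human reviewers, trading workload for fewer one-sided automatic decisions.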

The full article can be read on the Aalto University website.

The android hunter is a replicant

A replicant is a machine with humanoid features that, apart from its appearance, comes close to the human in only a few things. In the case of the androids of Blade Runner 2049, the eye, or rather the back of the iris, which has an orange tint, is the great distinguishing mark. Being machines, they have superhuman characteristics such as strength and speed, and many other characteristics may be human, but would robots have a soul?

Or, to ask the question most common in the media: do robots fall asleep counting sheep that are machines? Yet the strongest question, ever since the first version of the film in 1982, is whether Rick Deckard (Harrison Ford), the android hunter, is also an android.

The director has already answered this question in the affirmative, and there is a hint in the film when Deckard dreams of a unicorn (by our classification the unicorn is already a replicant, since it has something superhuman, the horn) and someone already knows his dream: that is, replicants even have their dreams projected for them. But why did the “creator” preserve him? That is a question he himself asks.

Another line of Rick Deckard’s makes this clear: “Replicants are just like any other machine: they are a benefit or a danger. If they are a benefit, it is not my problem.” That is, it matters to build a machine that can deal with the danger that others pose.

But the line is enlightening on another point as well: there are humans worried about machines, even without knowing what the dangers are. In the dialogue Deckard says that, like any other machine, this should not be an obstacle to their existence; the underlying bottleneck is fear. That, in my opinion, is why there is always this somber tone in both the first and the second version, which cult refinement calls “noir”.

Machines and advances always bring problems; they pull things out of their comfort zone, but there is no way to make an omelet without breaking eggs, and you just have to check which ones are rotten. The “dark” computers of 2001: A Space Odyssey have passed, and the androids will pass; the future belongs to us, man is the protagonist of his future, or at least he must wish to be.